In the context of quantum computing, we often hear that RSA and ECDH are “quantum broken,” but AES is still “quantum-safe,” even though virtually all AES keys are distributed using RSA or ECDH. There are lots of asterisks; let’s take a look.

A common claim today is that “AES is secure even against quantum computers.” The reasoning usually goes like this: Grover’s algorithm gives only a square-root speedup, so an attacker still needs ~2¹²⁸ effort to brute-force AES-256; therefore, AES remains safe. This view is misleading because it focuses solely on the mathematical core of AES and ignores the surrounding system. In practice, almost all real-world failures involving AES have nothing to do with cryptanalysis or supercomputers. They come from misuse, broken randomness, incorrect modes, device bugs, API pitfalls, and dangerous architectural assumptions. Long before a practical quantum computer arrives, AES-based systems already fail every day because they rely too heavily on a single algorithm and its flawless deployment.

AES Fails Constantly in Practice, Without Quantum

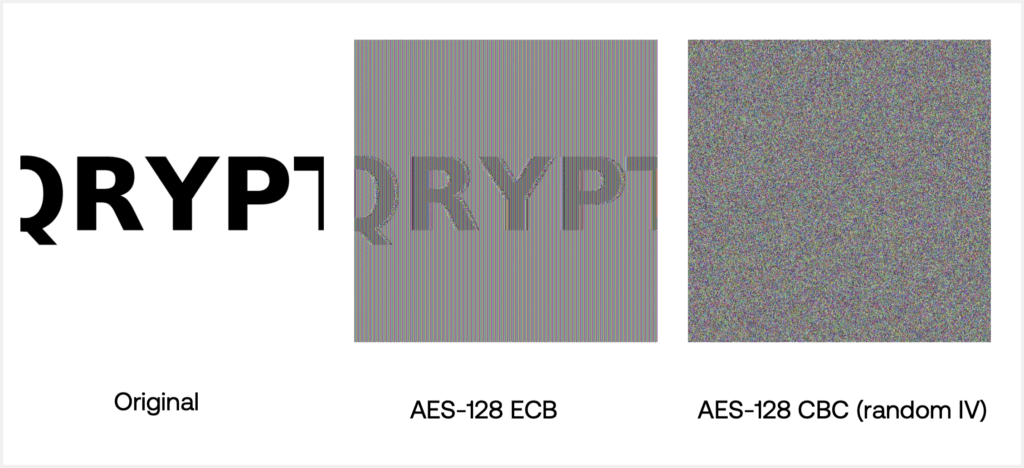

Consider how frequently AES confidentiality has been compromised by implementation errors alone. AES-ECB is the canonical example: because identical 16-byte blocks encrypt to identical ciphertext blocks, structural information in images, documents, and binary formats leaks directly through encryption. In earlier demonstrations, a large “QRYPT” image encrypted under ECB still visibly resembled the original, despite being encrypted with a mathematically strong cipher. AES didn’t break. Our simplistic assumption that ECB “should be good enough” did.
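The block-repetition leak is easy to reproduce. The sketch below is an illustration only: to stay dependency-free it uses a hypothetical `toy_encrypt_block` (a 4-round Feistel network over HMAC-SHA256) as a stand-in for AES. It is not AES and not secure. The behavior it shows comes from the ECB mode itself, so any deterministic block cipher would exhibit it.

```python
import hashlib
import hmac

BLOCK = 16  # bytes, the same block size as AES


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """Stand-in for AES: a 4-round Feistel network. NOT secure, illustration only."""
    left, right = block[:8], block[8:]
    for rnd in range(4):
        f = hmac.new(key, bytes([rnd]) + right, hashlib.sha256).digest()[:8]
        left, right = right, bytes(a ^ b for a, b in zip(left, f))
    return left + right


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """ECB mode: every block is encrypted independently under the same key."""
    assert len(plaintext) % BLOCK == 0, "sketch assumes pre-padded input"
    return b"".join(
        toy_encrypt_block(key, plaintext[i:i + BLOCK])
        for i in range(0, len(plaintext), BLOCK)
    )


key = b"\x01" * 32
# A tiny "image": two identical white rows around one black row.
plaintext = b"\xff" * BLOCK + b"\x00" * BLOCK + b"\xff" * BLOCK
ciphertext = ecb_encrypt(key, plaintext)
blocks = [ciphertext[i:i + BLOCK] for i in range(0, len(ciphertext), BLOCK)]

# Identical plaintext blocks map to identical ciphertext blocks.
print(blocks[0] == blocks[2])  # True
print(blocks[0] == blocks[1])  # False
```

An observer who sees only `blocks` learns which rows of the picture match, without ever touching the key.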

CBC is often positioned as an improvement over ECB, but even CBC fails when used with a static or repeated IV. Encrypting the same QRYPT image twice under AES-CBC with an all-zero IV did not produce ciphertexts whose structure was visibly correlated with the plaintext; each ciphertext individually looked like random noise. The failure was instead that the two ciphertexts were identical, revealing that the scheme is deterministic. CBC’s security depends on a fresh, unpredictable IV for every encryption, a requirement many systems unfortunately violate. Reusing the IV destroys semantic security and lets attackers detect repeated messages or shared prefixes, which can also reveal relationships between large datasets.
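A minimal sketch of the static-IV failure follows. As an assumption for illustration, a hypothetical `toy_encrypt_block` (a small Feistel network over HMAC-SHA256) stands in for AES. It is not secure, but the determinism shown here is a property of CBC with a fixed IV, not of the block cipher.

```python
import hashlib
import hmac
import os

BLOCK = 16  # bytes, the same block size as AES


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """Stand-in for AES: a 4-round Feistel network. NOT secure, illustration only."""
    left, right = block[:8], block[8:]
    for rnd in range(4):
        f = hmac.new(key, bytes([rnd]) + right, hashlib.sha256).digest()[:8]
        left, right = right, bytes(a ^ b for a, b in zip(left, f))
    return left + right


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    """CBC mode: each plaintext block is XORed with the previous ciphertext block."""
    assert len(iv) == BLOCK and len(plaintext) % BLOCK == 0
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        mixed = bytes(a ^ b for a, b in zip(plaintext[i:i + BLOCK], prev))
        prev = toy_encrypt_block(key, mixed)
        out.append(prev)
    return b"".join(out)


key = b"\x02" * 32
zero_iv = bytes(BLOCK)
msg_a = b"ACCOUNT=ALICE;  " + b"AMOUNT=00000100;"
msg_b = b"ACCOUNT=ALICE;  " + b"AMOUNT=00009999;"

ct_a1 = cbc_encrypt(key, zero_iv, msg_a)
ct_a2 = cbc_encrypt(key, zero_iv, msg_a)
ct_b = cbc_encrypt(key, zero_iv, msg_b)

print(ct_a1 == ct_a2)                # True: a repeated message is detectable
print(ct_a1[:BLOCK] == ct_b[:BLOCK])  # True: a shared first block is detectable

# With a fresh random IV per message, the same plaintext encrypts differently.
fresh_1 = cbc_encrypt(key, os.urandom(BLOCK), msg_a)
fresh_2 = cbc_encrypt(key, os.urandom(BLOCK), msg_a)
print(fresh_1 == fresh_2)            # False
```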

Nonce Reuse: The Achilles’ Heel of GCM and CTR

AES-GCM is widely trusted, but its security collapses under nonce reuse. Reusing even a single nonce with the same key compromises both confidentiality and integrity: the CTR keystream is repeated, exposing relationships between plaintexts, and the GHASH authentication key becomes recoverable, enabling tag forgeries. This failure mode is not merely theoretical: several real-world TLS and QUIC implementations have been caught reusing GCM nonces, allowing attackers to forge messages and, in some cases, recover plaintext.
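The integrity half of that failure can be sketched with a deliberately simplified, GCM-shaped tag. The assumptions are significant: messages are a single ciphertext block with no associated data, the field arithmetic uses GCM's polynomial but not its bit ordering, and HMAC-SHA256 stands in for the AES calls. The names `toy_tag`, `prf`, `gf_mul`, and `gf_inv` are hypothetical. What carries over to real GCM is the algebra: when a nonce repeats, the per-nonce mask cancels and the tags leak the hash key.

```python
import hashlib
import hmac

POLY = (1 << 128) | 0x87  # x^128 + x^7 + x^2 + x + 1, GCM's field polynomial


def gf_mul(a: int, b: int) -> int:
    """Multiply two elements of GF(2^128) (plain bit order, unlike real GCM)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a >> 128:
            a ^= POLY
    return result


def gf_inv(a: int) -> int:
    """Inverse via a^(2^128 - 2), valid for any nonzero field element."""
    result, exp = 1, (1 << 128) - 2
    while exp:
        if exp & 1:
            result = gf_mul(result, a)
        a = gf_mul(a, a)
        exp >>= 1
    return result


def prf(key: bytes, label: bytes) -> int:
    """Stand-in for an AES call: 128 bits of HMAC-SHA256 output."""
    return int.from_bytes(hmac.new(key, label, hashlib.sha256).digest()[:16], "big")


def toy_tag(key: bytes, nonce: bytes, ct_block: int) -> int:
    """GCM-shaped tag for one ciphertext block: (C*H^2 xor L*H) xor mask(nonce)."""
    h = prf(key, b"hash-key")  # plays the role of H = E_K(0^128)
    length_block = 128         # ciphertext length in bits
    ghash = gf_mul(gf_mul(ct_block, h) ^ length_block, h)
    return ghash ^ prf(key, b"mask" + nonce)


key = b"\x03" * 32
nonce = b"\x00" * 12  # the same nonce is (wrongly) used for both messages
c1 = int.from_bytes(b"first ciphertext", "big")
c2 = int.from_bytes(b"second cipher-tx", "big")
t1, t2 = toy_tag(key, nonce, c1), toy_tag(key, nonce, c2)

# The attacker sees only (c1, t1) and (c2, t2). The mask cancels:
#   t1 xor t2 = (c1 xor c2) * H^2
h_squared = gf_mul(t1 ^ t2, gf_inv(c1 ^ c2))

# Forge a valid tag for a block the key holder never authenticated.
c3 = int.from_bytes(b"attacker-chosen!", "big")
forged = t1 ^ gf_mul(c1 ^ c3, h_squared)
print(forged == toy_tag(key, nonce, c3))  # True
```

The forgery never uses `key`; two tags under one nonce are enough.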

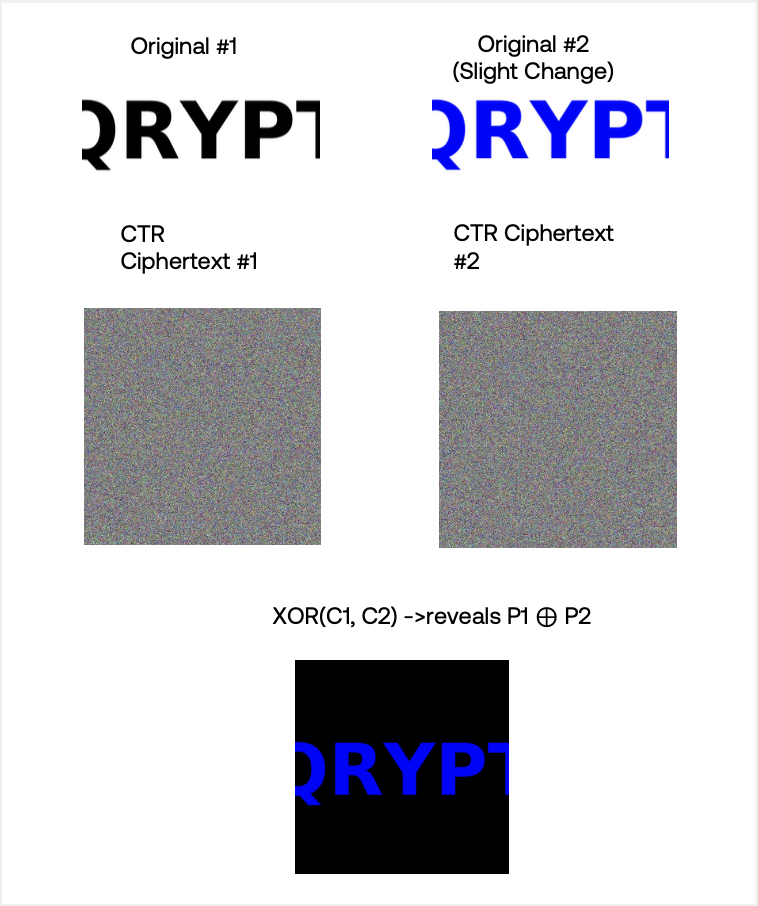

CTR mode suffers the same fundamental weakness. In our plaintext-only AES-CTR demonstration, encrypting two related plaintexts with the same key and counter let us XOR their ciphertexts and immediately obtain the XOR of the plaintexts. If even one plaintext is known or guessed, the other is revealed instantly. No quantum computer is required, just a single nonce misuse.
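The keystream-reuse effect takes only a few lines to show. In this sketch the keystream comes from HMAC-SHA256 rather than AES (an assumption made to keep the example dependency-free; `toy_keystream` is a hypothetical helper). The XOR relationship holds for any CTR-style stream, AES-CTR included.

```python
import hashlib
import hmac


def toy_keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """CTR-style keystream: PRF(key, nonce || counter) per block. HMAC stands in for AES."""
    out, counter = b"", 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(4, "big"), hashlib.sha256).digest()[:16]
        counter += 1
    return out[:length]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def ctr_encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    return xor(plaintext, toy_keystream(key, nonce, len(plaintext)))


key = b"\x04" * 32
nonce = b"\x00" * 12  # reused for both messages
p1 = b"PAY BOB   $0000100 TODAY"
p2 = b"PAY CAROL $9999999 LATER"
c1 = ctr_encrypt(key, nonce, p1)
c2 = ctr_encrypt(key, nonce, p2)

# The keystream cancels: c1 xor c2 equals p1 xor p2, with no key involved.
print(xor(c1, c2) == xor(p1, p2))  # True

# Knowing (or guessing) p1 reveals p2 immediately.
print(xor(xor(c1, c2), p1))        # b'PAY CAROL $9999999 LATER'
```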

RNG Failures: When AES Keys and IVs Are Predictable

Randomness failures have repeatedly and catastrophically compromised AES-based systems:

- Debian OpenSSL (2006–2008): A two-line change left the process ID as essentially the only source of entropy, so AES keys, IVs, and session tokens became guessable.

- Android SecureRandom (2013): Predictable randomness allowed theft of Bitcoin wallets. The same flaw would have broken AES encryption that relied on the same PRNG, but we will likely never know the extent, because encrypted content, unlike blockchain transactions, is not publicly visible.

- Juniper ScreenOS (2015): A backdoored Dual_EC RNG enabled passive decryption of AES-protected VPN traffic.

In each case, AES remained mathematically intact, but systems that treated AES as their security anchor collapsed because other components, like randomness, failed.
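To make the scale of such a failure concrete, here is a sketch of a key generator whose only entropy is a process ID, loosely modeled on the Debian case. It is not the actual OpenSSL code: `weak_keygen` and `toy_encrypt` are hypothetical, and an HMAC-based stream stands in for AES. With about 2¹⁵ possible seeds, an attacker who knows a few bytes of plaintext can simply try them all.

```python
import hashlib
import hmac

MAX_PID = 32768  # default Linux PID limit, so roughly 2^15 possible seeds


def weak_keygen(pid: int) -> bytes:
    """Hypothetical broken generator: the process ID is the ONLY entropy."""
    return hashlib.sha256(b"seed:" + pid.to_bytes(4, "big")).digest()[:16]


def toy_encrypt(key: bytes, data: bytes) -> bytes:
    """XOR with an HMAC-derived keystream (stand-in for AES-CTR); self-inverse."""
    stream, counter = b"", 0
    while len(stream) < len(data):
        stream += hmac.new(key, counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


KNOWN_HEADER = b"%PDF-1.7"  # file magic the attacker can guess
victim_key = weak_keygen(12345)
ciphertext = toy_encrypt(victim_key, KNOWN_HEADER + b" confidential report body")


def recover_key(ct: bytes):
    """Try every possible seed until the known header decrypts correctly."""
    for pid in range(1, MAX_PID + 1):
        candidate = weak_keygen(pid)
        if toy_encrypt(candidate, ct[:len(KNOWN_HEADER)]) == KNOWN_HEADER:
            return candidate
    return None


recovered = recover_key(ciphertext)
print(recovered == victim_key)         # True
print(toy_encrypt(recovered, ciphertext))
```

The search finishes in well under a second on commodity hardware, regardless of how strong the cipher is.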

AES Does Not Enjoy a Clean 2¹²⁸ Security Margin

A second misconception is the assumption that AES inherently provides a pure 2¹²⁸- or 2²⁵⁶-level brute-force security margin. In practice, no block cipher, even an idealized one, exists in such a vacuum. Real adversaries operate within a broader landscape that includes time–memory–data trade-offs, large-scale preprocessing, hardware specialization, and accumulated ciphertext over decades. Under these conditions, an attacker can invest enormous resources up front to reduce the amount of online work required later. This does not break AES, nor does it suddenly turn 2¹²⁸ security into 2⁶⁴ security, but it does mean that the true effective security margin is not as perfectly clean, rigid, or static as “2¹²⁸ brute-force” suggests.
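One way to put rough numbers on this is the classic time-memory-data trade-off curve, T·M²·D² = N² with preprocessing P = N/D, valid for T ≥ D². The sketch below works in base-2 logarithms and treats the curve as an idealized model, which is an assumption: it ignores constants, memory-access cost, and hardware limits, and it describes recovering one of D keys that encrypted the same known plaintext rather than a specific target key. The function name `tmd_tradeoff` is hypothetical.

```python
def tmd_tradeoff(key_bits: int, memory_bits: int, data_bits: int) -> tuple[int, int]:
    """Idealized trade-off curve T * M^2 * D^2 = N^2 with preprocessing P = N / D.

    All arguments and results are base-2 logarithms. D is the number of
    independent keys observed encrypting the same known plaintext.
    Returns (log2 of online time T, log2 of preprocessing P).
    """
    online_bits = 2 * (key_bits - memory_bits - data_bits)
    precompute_bits = key_bits - data_bits
    if online_bits < 2 * data_bits:
        raise ValueError("outside the curve's range, which requires T >= D^2")
    return online_bits, precompute_bits


# 128-bit keys, 2^64 memory, 2^32 target keys.
print(tmd_tradeoff(128, 64, 32))   # (64, 96)

# 256-bit keys need 2^128 memory and 2^192 preprocessing for 2^128 online work.
print(tmd_tradeoff(256, 128, 64))  # (128, 192)
```

In the first case the online phase drops to 2⁶⁴ operations, but only after 2⁹⁶ operations of preprocessing and with 2⁶⁴ units of storage. The total cost stays enormous, yet the margin is visibly not one fixed number.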

These nuances matter particularly for long-lived data and systems: encrypted archives stored for decades, medical implants, industrial control systems, satellites, and defense platforms that cannot be updated or re-keyed easily. In these environments, even modest reductions in effective security margins, often caused not by mathematical breakthroughs but by attacker preprocessing and resource asymmetries, become relevant. The long-term threat to AES is not sudden collapse, but that many systems implicitly assume its security margin will remain fixed and inviolable forever, regardless of attacker capabilities or evolving computational landscapes.

Even With “Misuse-Resistant” Modes, AES Remains a Single Point of Failure

Some modern modes, such as AES-GCM-SIV, AES-SIV, and AES-GMAC-SIV, are designed to mitigate common pitfalls such as nonce reuse. They are excellent improvements and eliminate entire categories of catastrophic failures. But they remain niche and rarely deployed, and, most critically, they still rely on a single block cipher, a single key, and a single model of confidentiality.
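The core idea behind these modes is a "synthetic IV": the IV is derived from the message itself, so a repeated nonce no longer repeats the keystream unless the whole message repeats too. The sketch below shows only that idea. It is not AES-SIV or AES-GCM-SIV as standardized; HMAC-SHA256 stands in for the AES-based components, and the names `siv_style_encrypt` and `siv_style_decrypt` are hypothetical.

```python
import hashlib
import hmac


def prf(key: bytes, *parts: bytes) -> bytes:
    """HMAC-SHA256 over length-prefixed parts (stand-in for an AES-based PRF)."""
    mac = hmac.new(key, digestmod=hashlib.sha256)
    for part in parts:
        mac.update(len(part).to_bytes(8, "big") + part)
    return mac.digest()


def keystream(key: bytes, iv: bytes, length: int) -> bytes:
    out, counter = b"", 0
    while len(out) < length:
        out += prf(key, iv, counter.to_bytes(4, "big"))
        counter += 1
    return out[:length]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def siv_style_encrypt(mac_key, enc_key, nonce, plaintext):
    """The synthetic IV is a MAC of (nonce, plaintext) and doubles as the tag."""
    siv = prf(mac_key, nonce, plaintext)[:16]
    return siv, xor(plaintext, keystream(enc_key, siv, len(plaintext)))


def siv_style_decrypt(mac_key, enc_key, nonce, siv, ciphertext):
    plaintext = xor(ciphertext, keystream(enc_key, siv, len(ciphertext)))
    if not hmac.compare_digest(siv, prf(mac_key, nonce, plaintext)[:16]):
        raise ValueError("authentication failed")
    return plaintext


mac_key, enc_key = b"\x05" * 32, b"\x06" * 32
nonce = b"\x00" * 12  # deliberately reused
p1 = b"PAY BOB   $0000100 TODAY"
p2 = b"PAY CAROL $9999999 LATER"
siv1, c1 = siv_style_encrypt(mac_key, enc_key, nonce, p1)
siv2, c2 = siv_style_encrypt(mac_key, enc_key, nonce, p2)

print(xor(c1, c2) == xor(p1, p2))  # False: the keystreams differ despite nonce reuse
print(siv_style_decrypt(mac_key, enc_key, nonce, siv2, c2) == p2)  # True
```

What still leaks under nonce reuse is equality: encrypting the identical message twice with the same nonce yields the identical output.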

Even misuse-resistant modes do not eliminate:

- failures in key distribution,

- failures in randomness,

- failures in side-channel resistance,

- failures in device security,

- failures caused by cryptographic monoculture.

They reduce blast radius, but they do not remove the single point of failure inherent in systems built around a single algorithm.

Crypto-Agility Is Not the Answer, It Just Buys Time

“Crypto-agility” is often proposed as the architectural fix: if AES, RSA, or a PQC primitive fails, we simply swap in a new one. But crypto-agility does not eliminate the problem; it just lets us rotate which algorithm we are betting the farm on. If the newly selected PQC scheme suffers a mathematical break, an implementation flaw, or a side-channel attack, the entire system must be migrated again. That is no consolation for data that has already been harvested and is waiting for a publicly disclosed flaw to decrypt it.

Crypto-agility is more musical chairs than resilience. The cycle is to choose one primitive, trust it absolutely, wait for it to fail, and replace it with another. This is the single-point-of-failure problem writ large.

The Future Is Crypto-Redundancy, Not Crypto-Agility

The true future of secure architecture is eliminating single points of cryptographic failure. That means designing systems where the compromise of any one primitive (AES, Kyber, Dilithium, SHA-3, or anything else) does not automatically compromise confidentiality or integrity.
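One familiar building block for this is a secret combiner: the session key is derived from several independently established secrets, so an attacker must break every key-exchange mechanism to learn it. The sketch below is an assumption-laden illustration, not a description of any particular product or standard. `combine_secrets` is a hypothetical helper, random bytes stand in for the outputs of a classical exchange and a post-quantum KEM, and the security argument assumes HMAC-SHA256 behaves like a random function.

```python
import hashlib
import hmac
import secrets


def combine_secrets(shared_secrets: list[bytes], context: bytes) -> bytes:
    """Derive one 32-byte key from several independently established secrets.

    Each input is length-prefixed so that different splits of the same bytes
    cannot collide. The context string binds the key to its purpose.
    """
    material = b"".join(len(s).to_bytes(4, "big") + s for s in shared_secrets)
    return hmac.new(context, material, hashlib.sha256).digest()


# Stand-ins for the outputs of two unrelated key-establishment mechanisms.
classical_secret = secrets.token_bytes(32)     # e.g. from an ECDH exchange
post_quantum_secret = secrets.token_bytes(32)  # e.g. from a lattice-based KEM

session_key = combine_secrets(
    [classical_secret, post_quantum_secret], b"example-session-v1"
)

# An attacker who fully breaks one mechanism still has to guess the other secret.
attacker_guess = combine_secrets(
    [classical_secret, secrets.token_bytes(32)], b"example-session-v1"
)
print(attacker_guess == session_key)  # False
```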

This future includes:

- cryptographic diversity (multiple primitives in parallel),

- threshold and multipath encryption,

- entropy fusion and quantum randomness,

- protocols that remain secure even if one component is flawed,

- architectures that degrade gracefully rather than catastrophically.
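As a small illustration of the multipath idea, a key can be split into shares that travel over different channels, so that capturing any one channel reveals nothing. The sketch below uses simple XOR splitting, which requires all shares to reconstruct (n-of-n). The helper names `split_key` and `join_shares` are hypothetical, and this is not presented as how any specific system implements it.

```python
import secrets
from functools import reduce


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, n: int) -> list[bytes]:
    """Split a key into n shares; all n are needed to rebuild it."""
    assert n >= 2
    shares = [secrets.token_bytes(len(key)) for _ in range(n - 1)]
    # The last share is the key XORed with every random share.
    shares.append(reduce(xor, shares, key))
    return shares


def join_shares(shares: list[bytes]) -> bytes:
    """XOR all shares together to recover the key."""
    return reduce(xor, shares)


key = secrets.token_bytes(32)
shares = split_key(key, 3)  # e.g. one share per network path

print(join_shares(shares) == key)      # True
print(join_shares(shares[:2]) == key)  # False
```

Any subset of fewer than n shares is statistically independent of the key. Threshold schemes such as Shamir's secret sharing extend this so that any k of n shares suffice, which also tolerates a lost path.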

Instead of asking, “Which cipher should we trust?”, the right question becomes, “How do we ensure no single cipher can betray us?”

Conclusion

The issue is not that AES may someday fall to quantum computers. The real problem is that AES and many other primitives fail today when misused, misconfigured, or placed in architectures that rely on them too heavily or entirely. Treating AES as an immutable pillar of security hides the deeper danger: it is a single point of catastrophic failure embedded in nearly every modern system.

The path forward is not crypto-agility but crypto-redundancy, crypto-diversity, and real cryptographic resilience. The systems of tomorrow must remain secure even when individual primitives fail, whether due to quantum breakthroughs, classical cryptanalysis, hardware flaws, or human error. Eliminating single points of failure will ensure cryptographic solutions remain robust and reliable even when algorithms fail. Get Qrypt.